About the program

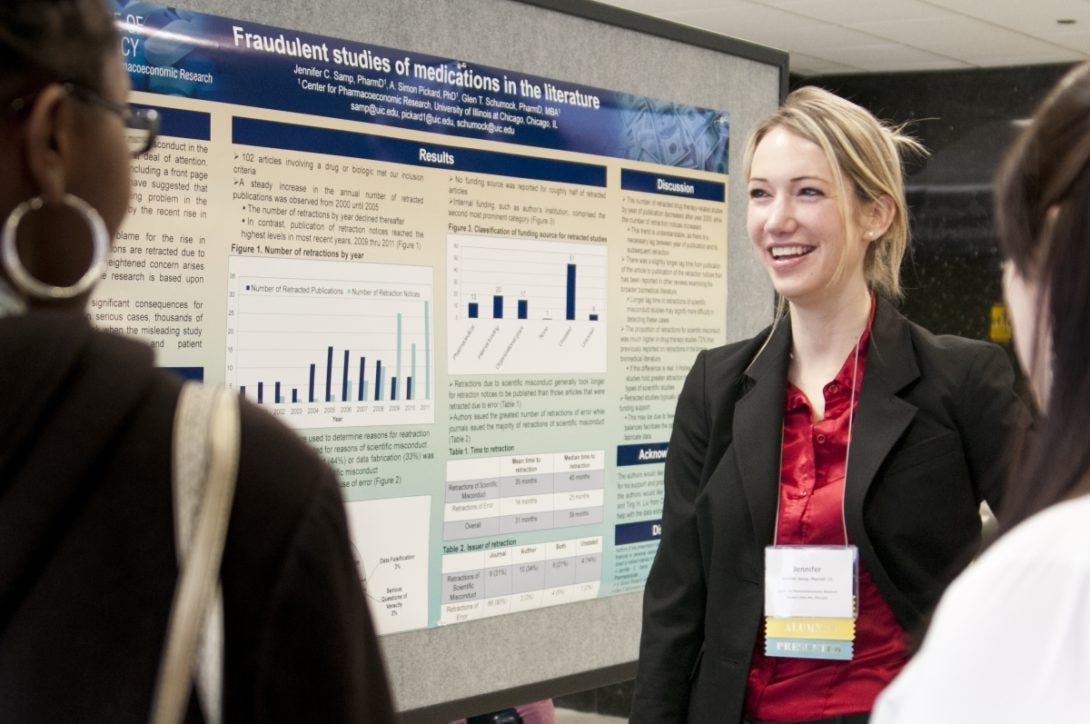

The PhD in Pharmacy is a highly competitive, STEM-designated graduate program that attracts students from all over the world. Housed in the UIC Department of Pharmacy Systems, Outcomes and Policy (PSOP), the PhD program is supported by PSOP faculty and staff who are dedicated to the education of future pharmacists and pharmacy researchers.

The program provides intensive coursework, with strong emphasis on combining the fundamentals of statistics and research design with theoretical frameworks from decision and information sciences, economics, psychology, epidemiology, communication, public health, and education.

Using research to inform better policies and practice

With today’s emphasis on both the cost and quality of health care, opportunities abound for those with research expertise in pharmacy systems, outcomes, and policy. Employers in the pharmaceutical industry, consulting organizations, government agencies, managed care companies, academia, and other sectors are highly interested in individuals who can integrate knowledge of biostatistics and research design with the social sciences and apply it to study pharmacy services, pharmaceutical products, patient and health system outcomes, and pharmaceutical and health policy.

Focus areas

Students benefit from the research strengths of the PSOP department, which are supported by local, national, and international partnerships with government agencies, insurance companies, pharmaceutical manufacturers, professional associations, and other researchers.

Areas of emphasis and specialization for PhD in Pharmacy students include:

- Pharmacoeconomics and outcomes research

- Pharmacoepidemiology and drug safety

- Pharmacy systems and policy

- Pharmaceutical education research

Work alongside top faculty

Students in the program work with faculty who are national and international leaders in their fields. Department faculty hold leadership positions in academic and professional associations, serve as editors of major medical and pharmacy journals, and sit on the boards of pharmacy and health care-related companies and foundations. In addition, the department has adjunct and affiliate faculty members from government, industry, and pharmacy practice who provide expertise and mentorship.

Successful alumni

Joining our graduate program provides access to tremendous networking opportunities through faculty and alumni, many of whom hold senior positions and have become leaders in academia, the pharmaceutical industry, and health care-related business organizations.